Throughout its 60-year history, the Munich Security Conference has typically featured a lot of companies trying to win defense contracts, and a summary dismissal of anything presented by the Russian Federation.

But in a change of pace, the 60th edition of the conference concluded with an agreement drafted by a group of big tech companies to try to prevent the use of artificial intelligence to deceive and sow chaos during national elections.

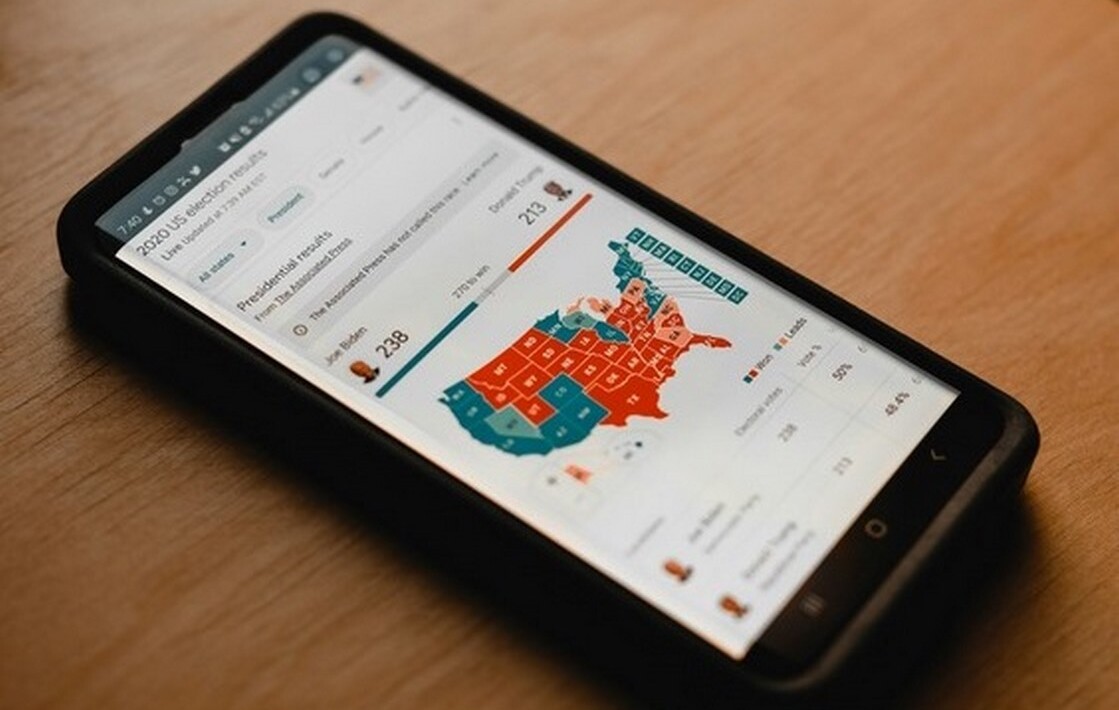

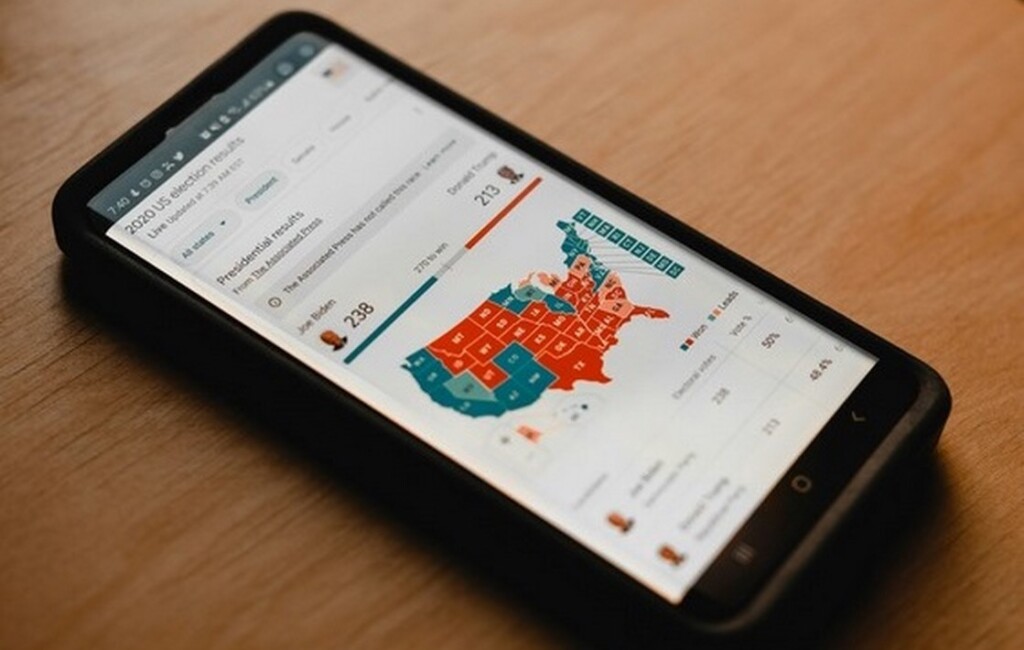

As the companies point out, 2024 will bring more elections to more people than any year in history, with more than 40 countries, including two of the three largest democracies in the world, and more than four billion people choosing their leaders and representatives through the right to vote.

Their intention with the agreement is to combat deceptive AI election content such as “convincing AI-generated audio, video, and images that deceptively fake or alter the appearance, voice, or actions of political candidates, election officials, and other key stakeholders in a democratic election, or that provide false information to voters about when, where, and how they can lawfully vote.”

Signatories include Meta, OpenAI, X, TikTok, Microsoft, McAfee, and Amazon.

Because the past eight years have featured several major events that kept social media censorship and government infiltration and control of the internet near the center of the debate over technology and its role in society, the companies were quick to point out that such AI-generated content, should it appear, won’t be taken down outright (presumably unless it violates other terms of service, such as those covering violent or graphic content). Instead, it will be labeled as AI-generated so people can do their own fact-checking of what the image or video says or claims.

Three huge democracies have already gone to the polls to choose their executives: Indonesia, Pakistan, and Bangladesh. Taiwan has also held elections. Nine months from now it will be the United States’ turn, and dozens of other elections will take place as well.

Euronews, reporting on the agreement, heard from industry members and others who viewed it positively. They noted that because all these companies have different terms of use, the agreement by nature had to be broad and unspecific.

“[No] one in the industry thinks that you can deal with a whole new technological paradigm by sweeping things under the rug and trying to play whack-a-mole and finding everything that you think may mislead someone,” said Nick Clegg, president of global affairs for Meta, which runs Instagram, Facebook, and WhatsApp.

“I think we should give credit where credit is due, and acknowledge that the companies do have a vested interest in their tools not being used to undermine free and fair elections,” said Rachel Orey, senior associate director of the Elections Project at the Bipartisan Policy Center, who also noted the language isn’t as strong as she would have liked.

“As leaders and representatives of organizations that value and uphold democracy, we recognize the need for a whole-of-society response to these developments throughout the year,” the companies write in their agreement.

“We are committed to doing our part as technology companies, while acknowledging that the deceptive use of AI is not only a technical challenge, but a political, social, and ethical issue and hope others will similarly commit to action across society.”
